I am currently a Ph.D. student at KAIST, advised by Prof. Kyung-Soo Kim.

My research focuses on control and state estimation for legged robots, particularly on developing agile and dynamic motions for quadruped robots and humanoids. I’m also interested in reinforcement learning frameworks that enable robots to reason about their body dynamics and interactions with complex environments, ultimately achieving more adaptive and versatile behaviors.

If you have any questions or would like to discuss ideas, feel free to reach out via email!

publications

*representative publications are highlighted in yellow.

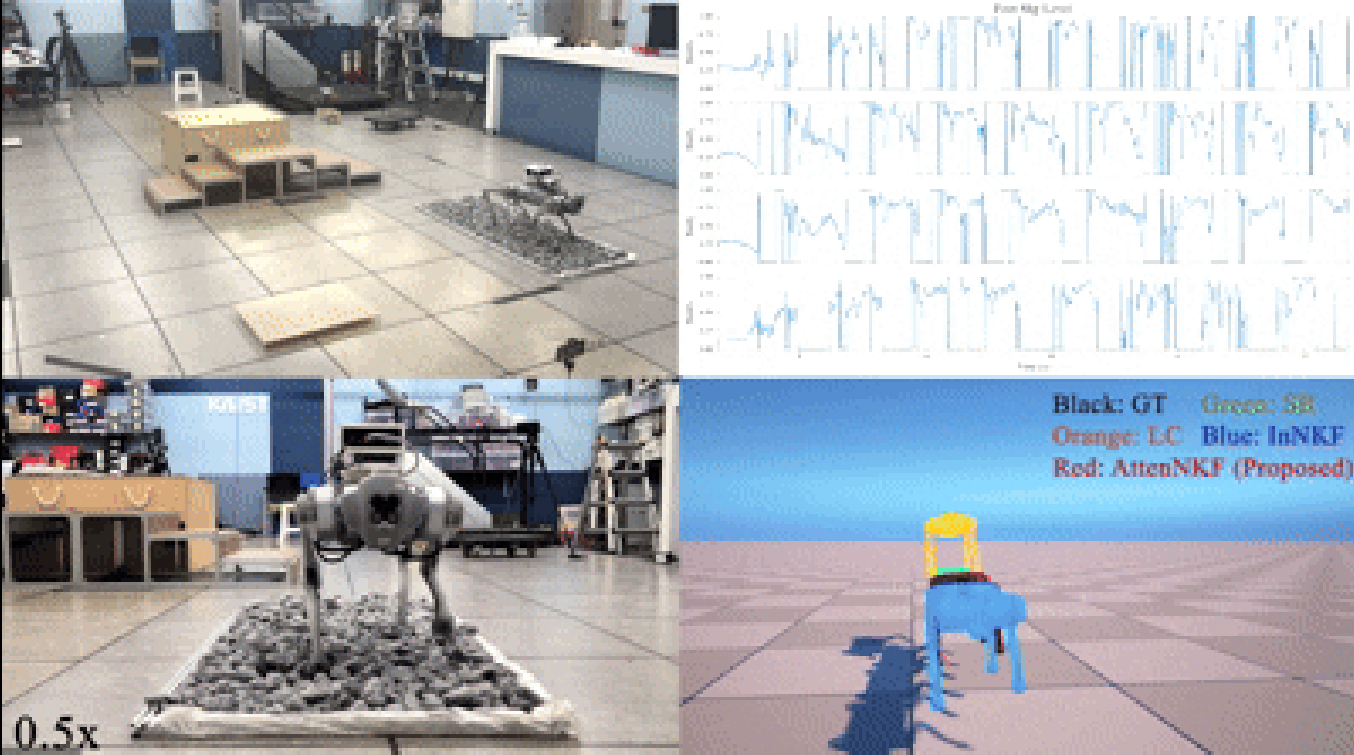

Attention-Based Neural-Augmented Kalman Filter for Legged Robot State Estimation

Seokju Lee, Kyung-Soo Kim

IEEE Robotics and Automation Letters (RA-L) (Under Review)

In this letter, we propose an Attention-Based Neural-Augmented Kalman Filter (AttenNKF) for state estimation in legged robots. Foot slip is a major source of estimation error: when slip occurs, kinematic measurements violate the no-slip assumption and inject bias during the update step. Our objective is to estimate this slip-induced error and compensate for it. To this end, we augment an Invariant Extended Kalman Filter (InEKF) with a neural compensator that uses an attention mechanism to infer error conditioned on foot-slip severity and then applies this estimate as a post-update compensation to the InEKF state (i.e., after the filter update). The compensator is trained in a latent space, which aims to reduce sensitivity to raw input scales and encourages structured slip-conditioned compensations, while preserving the InEKF recursion. Experiments demonstrate improved performance compared to existing legged-robot state estimators, particularly under slip-prone conditions.

Legged Robot State Estimation Using Invariant Neural-Augmented Kalman Filter with a Neural Compensator

Seokju Lee, Hyun-Bin Kim, Kyung-Soo Kim

The 2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

This paper presents an algorithm to improve state estimation for legged robots. Among existing model-based state estimation methods for legged robots, the contact-aided invariant extended Kalman filter defines the state on a Lie group to preserve invariance, thereby significantly accelerating convergence. It achieves more accurate state estimation by leveraging contact information as measurements for the update step. However, when the model exhibits strong nonlinearity, the estimation accuracy decreases. Such nonlinearities can cause initial errors to accumulate and lead to large drifts over time. To address this issue, we propose compensating for errors by augmenting the Kalman filter with an artificial neural network serving as a nonlinear function approximator. Furthermore, we design this neural network to respect the Lie group structure to ensure invariance, resulting in our proposed Invariant Neural-Augmented Kalman Filter (InNKF). The proposed algorithm offers improved state estimation performance by combining the strengths of model-based and learning-based approaches.

Text optimization with latent inversion for non-rigid image editing

Yunji Jung, Seokju Lee, Tair Djanibekov, Jong Chul Ye, Hyunjung Shim

Pattern Recognition Letters

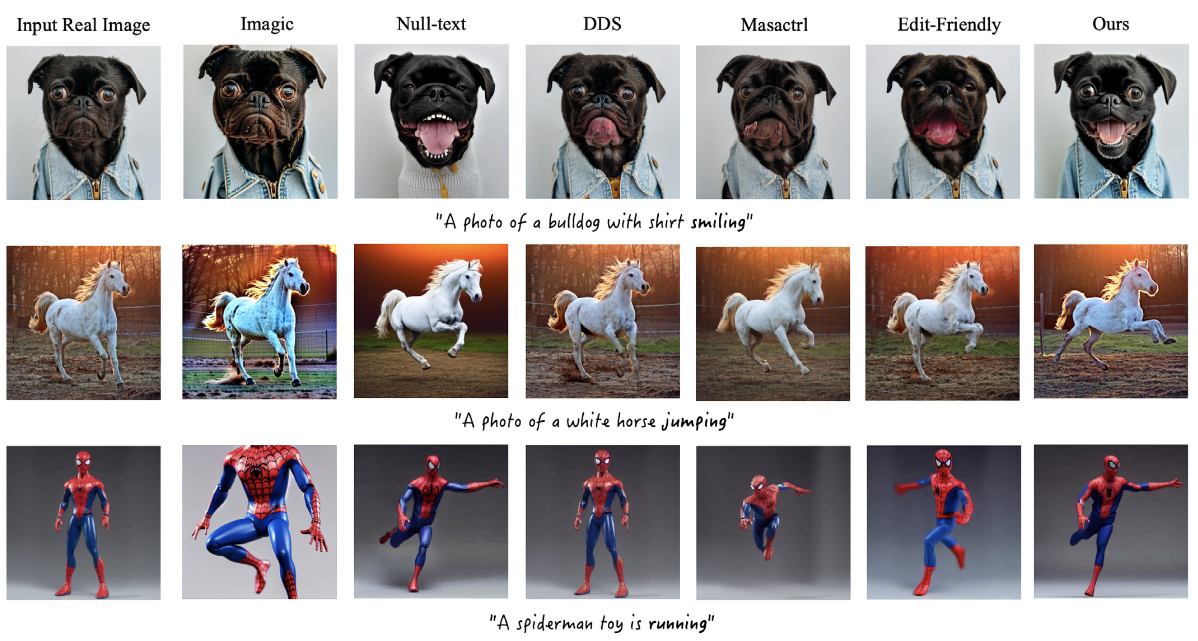

Text-guided non-rigid image editing involves complex edits for input images, such as changing motion or compositions of the object (e.g., making a horse jump or adding candles on a cake). Since it requires manipulating the structure of the object, existing methods often compromise “image identity” (defined as the overall object appearance and background details), particularly when combined with Stable Diffusion. In this work, we propose a new approach for non-rigid image editing with Stable Diffusion, aimed at improving the image identity preservation quality without compromising editability. Our approach comprises three stages: text optimization, latent inversion, and timestep-aware text injection sampling. Inspired by the success of Imagic, we employ their text optimization for smooth editing. Then, we introduce latent inversion to preserve the input image’s identity without additional model fine-tuning. To fully utilize the input reconstruction ability of latent inversion, we employ timestep-aware text injection sampling, strategically injecting the source text prompt in early sampling steps and then transitioning to the target prompt in subsequent sampling steps. This strategic approach seamlessly harmonizes with text optimization, facilitating complex non-rigid edits to the input without losing the original identity. We demonstrate the effectiveness of our method in terms of identity preservation, editability, and aesthetic quality through extensive experiments. Our code is available at https://github.com/YunjiJung0105/TOLI-non-rigid-editing

Temperature Compensation Method for a Six-Axis Force/Torque Sensor Using a Gated Recurrent Unit

Hyun-Bin Kim, Seokju Lee, Byeong-Il Ham, Keun-Ha Choi, Kyung-Soo Kim

IEEE Sensors Journal

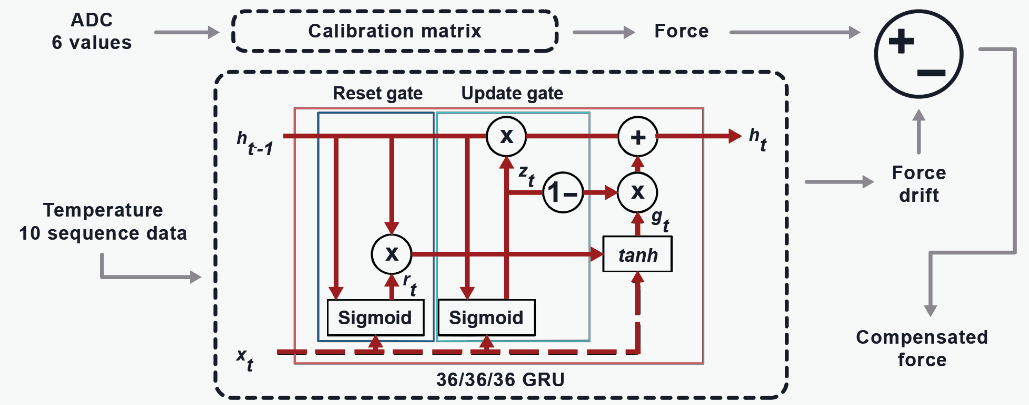

This study aims to enhance the accuracy of a six-axis force/torque (F/T) sensor by improving upon existing approaches that use a multilayer perceptron (MLP) and the least-squares method (LSM). While previous research has used MLPs for thermal compensation, it has not effectively addressed temperature-induced drift. The sensor used in this study operates based on infrared light and incorporates a photocoupler, which makes it highly sensitive to dark current effects, resulting in significant drift under temperature variations. Moreover, its compact and lightweight design (45 g) leads to low thermal capacity, making it susceptible to rapid temperature fluctuations even with minimal heat input, which in turn affects real-time performance. To address these challenges, this study proposes a gated recurrent unit (GRU)-based method and compares it with the conventional MLP approach. Experimental results demonstrate that the GRU-based model significantly reduces drift from 26 to 2.7 N, and the root mean square error (RMSE) from 500 to 3, representing a 100-fold improvement. These findings suggest that GRU-based modeling substantially improves real-time F/T measurements in temperature-sensitive environments, benefiting applications in robotics and precision instrumentation.

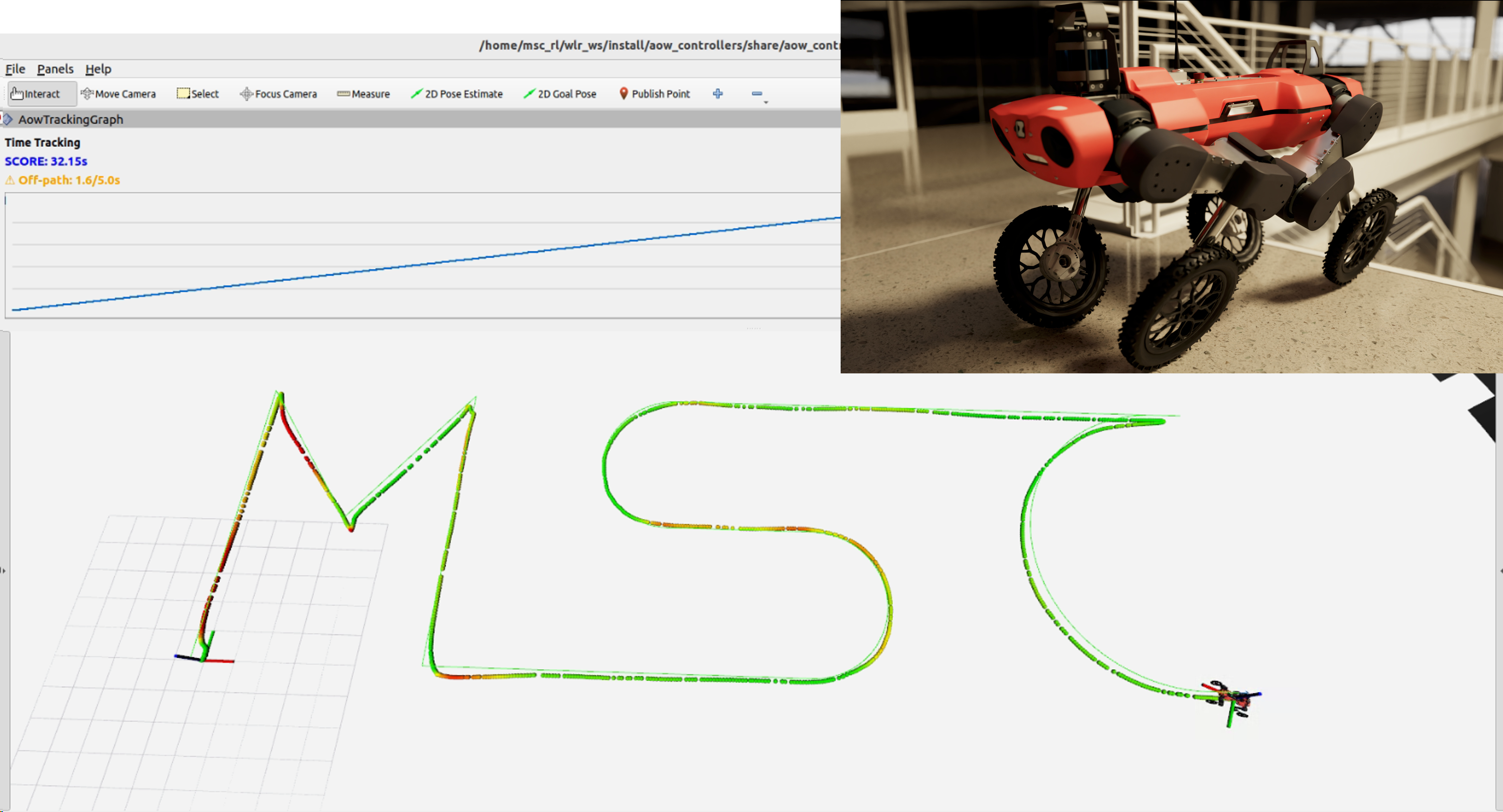

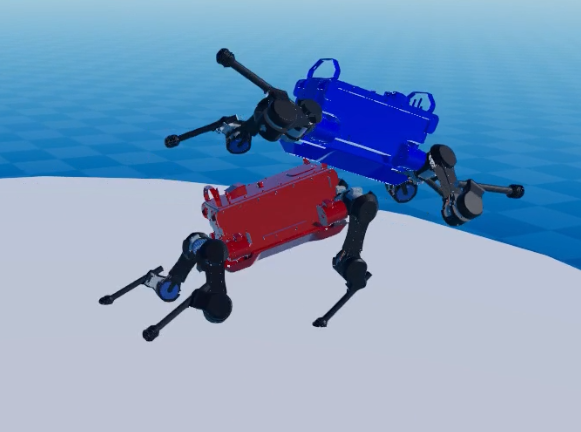

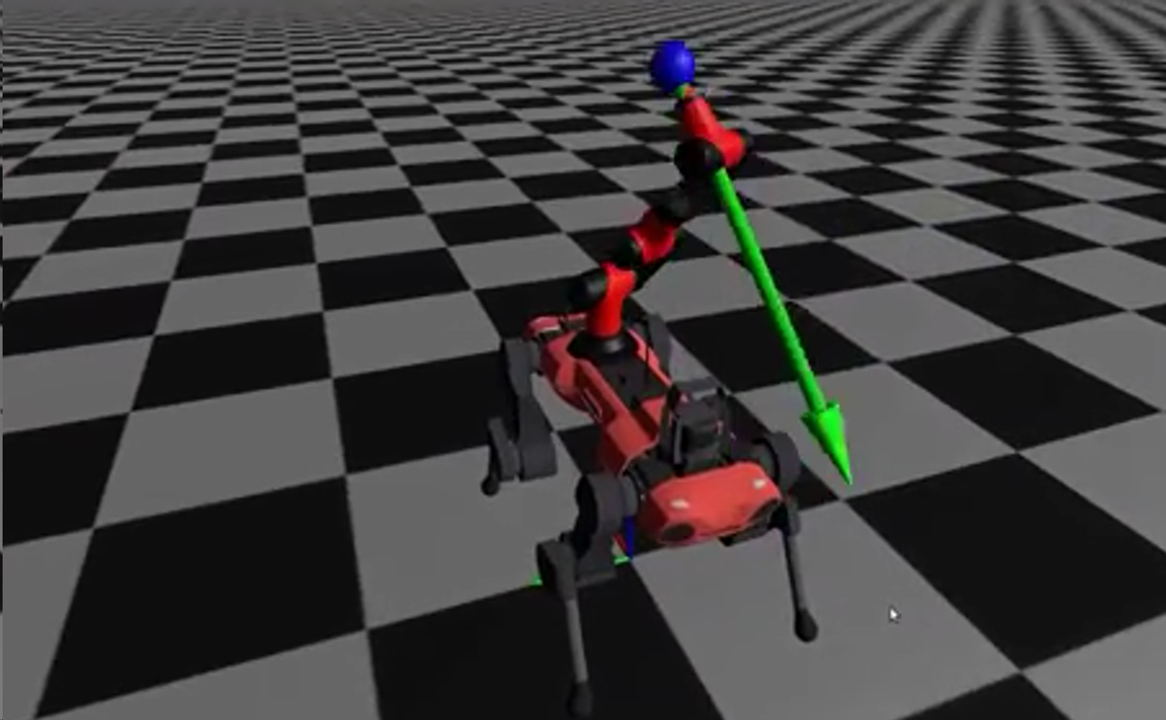

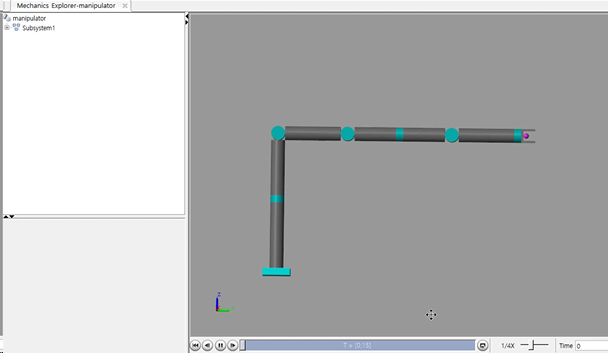

Learning legged mobile manipulation using reinforcement learning

Seokju Lee, Seunghun Jeon, Jemin Hwangbo

The 2022 International Conference on Robot Intelligence Technology and Applications (RiTA)

Many studies on quadrupedal manipulators have been conducted for extending the workspace of the end-effector. Many of these studies, especially the recent ones, use model-based control for the arm and learning-based control for the leg. Some studies solely focused on model-based control for controlling both the base and arm. However, model-based controllers such as MPC can be computationally inefficient when there are many contacts between the end-effector and the object. The dynamics of the interactions between a quadrupedal manipulator and the object in contact are complex and often unpredictable without high-resolution contact sensors on the end-effector. In this study, we investigate the possibility of using a reinforcement learning strategy to control an end-effector of a legged mobile manipulator. The proposed framework is verified for a walking and tracking task of the end-effector in a simulation environment.

projects